Introduction

Let's say that we are using Orleans and, specifically, that we leverage the in-memory model of grains as a smart cache. Other grains in the system call into this grain (let's call it CacheGrain), which keeps the set of cached data. Everything works well, and life is easier because of Orleans' location transparency.

However, application usage grows and more and more load is placed on the system, so we scale out the cluster and more silos join it. More load means more silos, more silos mean more calls to CacheGrain, and at some point timeouts will happen.

We are clever, though, and decide to apply [AlwaysInterleave] to the method that exposes the cached data. This gives us a throughput boost, but at some point even this won't be enough. And that is before considering an important detail: grains on other silos need to make a network trip to the single silo currently hosting the CacheGrain.

Solution 1

Putting current Orleans limitations on the back burner, we begin to explore a solution, and we certainly come up with one! Obviously other people have thought of it as well, like this, this, and this.

More or less, they all boil down to a concept known as the Readers–Writers problem. It refers to the situation in which many concurrent threads of execution try to access the same shared resource at one time. In our case this is elevated to a distributed runtime, as opposed to a single process, but it's the same basic idea.
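For reference, the single-process version of the problem can be sketched with .NET's ReaderWriterLockSlim. This is only an illustration of the concept, not something Orleans requires; the `SharedCache` class and its members are hypothetical names chosen for this sketch.

```csharp
using System;
using System.Collections.Generic;
using System.Threading;

// Hypothetical in-process cache guarded by a readers-writer lock.
public sealed class SharedCache
{
    private readonly ReaderWriterLockSlim gate = new();
    private readonly Dictionary<string, string> entries = new();

    // Many threads may hold the read lock at the same time.
    public string? Get(string key)
    {
        gate.EnterReadLock();
        try
        {
            return entries.TryGetValue(key, out var value) ? value : null;
        }
        finally
        {
            gate.ExitReadLock();
        }
    }

    // Only one thread may hold the write lock, and it excludes all readers.
    public void Set(string key, string value)
    {
        gate.EnterWriteLock();
        try
        {
            entries[key] = value;
        }
        finally
        {
            gate.ExitWriteLock();
        }
    }
}
```

In the distributed setting there is no shared lock to take, which is why the rest of the article is about replicating the data to local readers instead.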

To offload all those requests from our CacheGrain, and also eliminate the need for cross-silo communication, we want a single writer, i.e. our CacheGrain, and multiple readers, i.e. LocalCacheGrain(s), which are local to each silo and reflect the same contents as the main CacheGrain. Needless to say, the contents of the CacheGrain need to be replicated to all of these LocalCacheGrain(s). I intentionally write the (s) because there will need to be multiple activations, and the word local is also important, since we want locality for the cached data.

Applying the [StatelessWorker] attribute to a grain implementation tells the Orleans runtime to always create an activation on the current silo. This gives us the locality part!

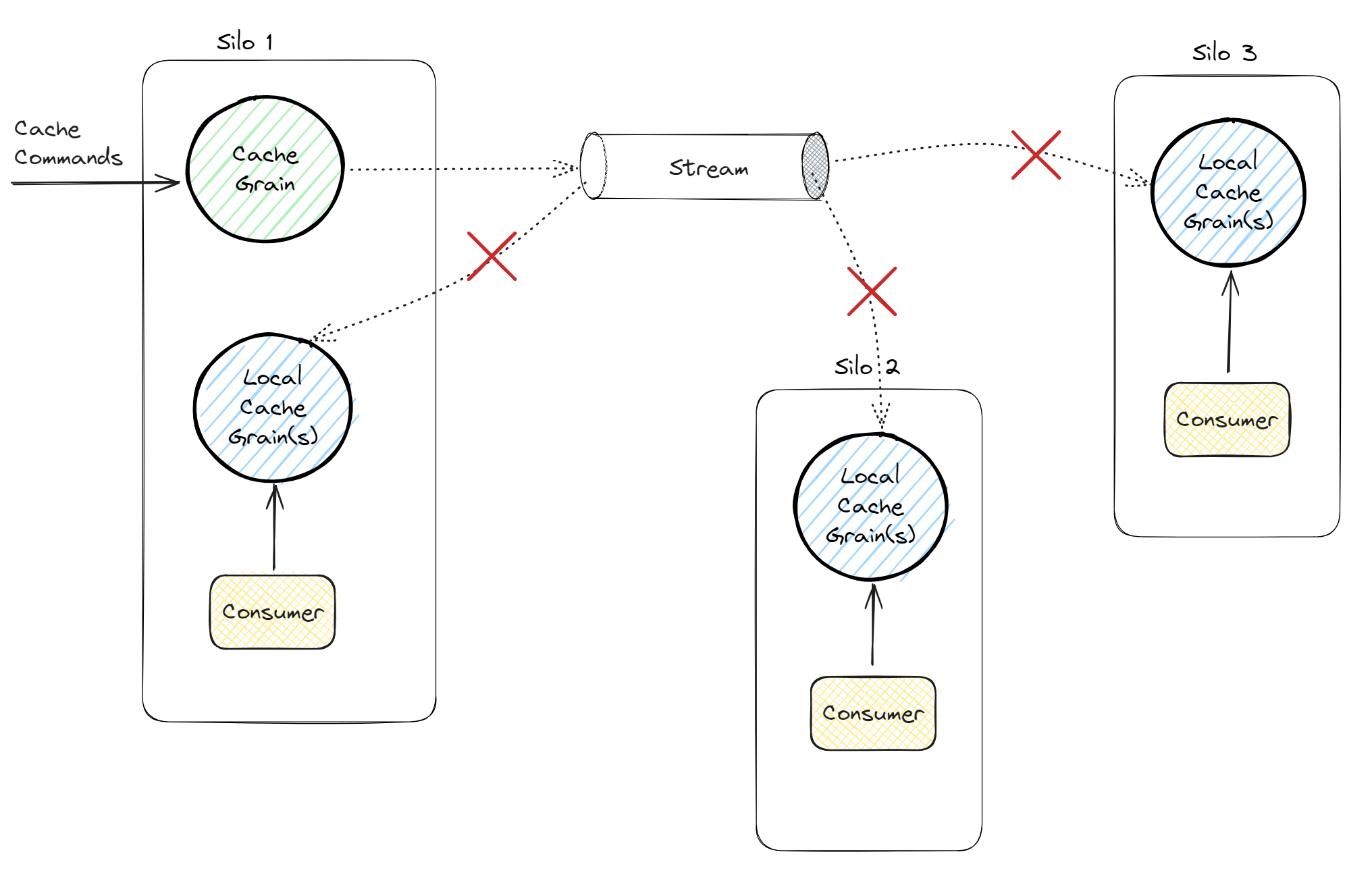

We can imagine a solution that looks like the one depicted in the diagram below.

We can notice several things in the diagram:

- The CacheGrain receives commands such as: Get, Add, Delete.

- Changes are streamed via Orleans Implicit Streaming to each LocalCacheGrain in each silo.

- Consumer code (whatever it is) calls into the local grains, which are stateless worker grains, which are guaranteed to be local.

There is a problem though!

Orleans sort of supports streaming to stateless workers, because they are grains at the end of the day, but the delivery is unpredictable as they are not remotely and individually addressable. That's why we show the ❌ symbol on the stream connections. A key is used when invoking an activation, but it is meant for logically grouping stateless workers, not for addressing an individual one!

A potential solution to this problem is to have a pool of reader grains and manage the pool explicitly, via ids. A caller would pick one id at random and issue a request to that grain, another caller would pick another grain (each with 1/N probability), and so on.

This certainly can work, but I see 2 problems with it:

- How do you decide whether to create 1, 2 or N reader grains?

- You would need to keep track of them, because when the cache is invalidated, you need to reflect that change in all of them.
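Both problems show up even in a toy version of the pool that does not involve Orleans at all. In the sketch below, `Reader` and `ReaderPool` are hypothetical stand-ins for the reader grains and whatever component manages them; the pool size is a guess fixed up front, and every change has to be fanned out to all readers.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for a reader grain holding a copy of the cache.
public sealed class Reader
{
    private readonly Dictionary<string, string> copy = new();

    public string? Get(string key) =>
        copy.TryGetValue(key, out var value) ? value : null;

    // A null value means the entry was removed from the cache.
    public void Apply(string key, string? value)
    {
        if (value is null) copy.Remove(key);
        else copy[key] = value;
    }
}

// Hypothetical pool manager: the size must be chosen up front (problem 1).
public sealed class ReaderPool
{
    private readonly Reader[] readers;
    private readonly Random random = new();

    public ReaderPool(int size)
    {
        readers = new Reader[size];
        for (var id = 0; id < size; id++) readers[id] = new Reader();
    }

    // Callers pick an id at random, so each reader is chosen with probability 1/N.
    public Reader PickRandom() => readers[random.Next(readers.Length)];

    // Every change must reach all readers, so the pool has to track them (problem 2).
    public void Broadcast(string key, string? value)
    {
        foreach (var reader in readers) reader.Apply(key, value);
    }
}
```

With real grains, `Broadcast` becomes N remote calls per change, and `size` has to be revisited every time the cluster or the load changes.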

Solution 2

Using stateless workers would be ideal, because the runtime will not only create an activation in each silo; it can (and will) create more activations of the same grain, even in the same silo, when it detects that the existing one is under high load. But we do have the streaming limitation, so we need to get creative. We also want to avoid having to keep track of the individual ones in the pool.

For this reason we can make use of OrleanSpaces. I have already written about it here & here (disclaimer: I am the author). It is a virtual, fully asynchronous Tuple Space implementation backed by Orleans. You don't need to know the ins and outs of it, but what is important to know is that in every process (whether it's a client or a silo) a so-called Space Agent is available.

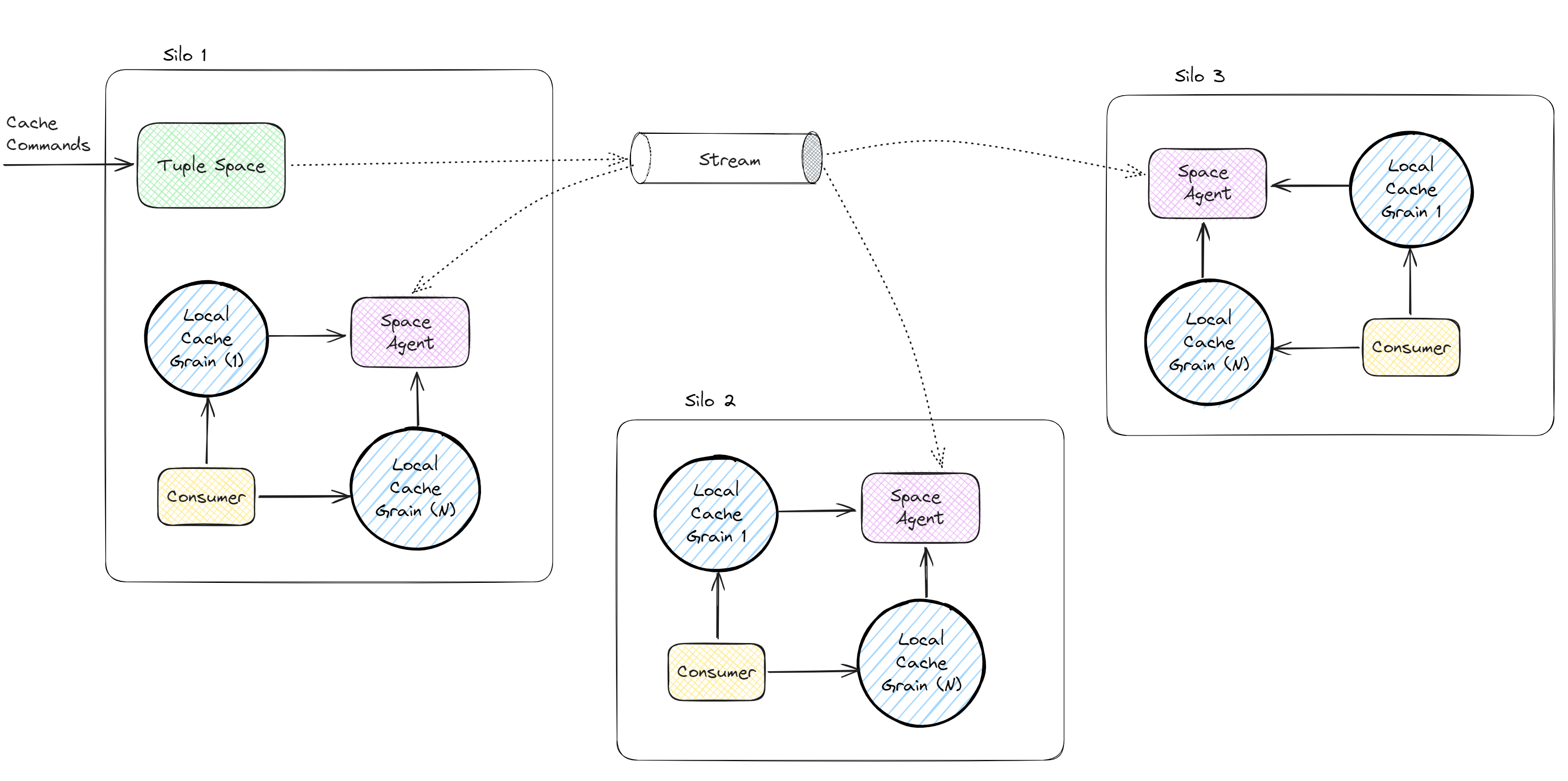

OrleanSpace handles all the syncronization between the tuple space and all agents, so you don't need to worry about that part. Redesigning the system would look like its depicted in the diagram below.

We can notice several things in the diagram:

- The CacheGrain has been removed in favor of the Tuple Space, although it doesn't have to be.

- LocalCacheGrain stays, and it's a stateless worker.

- The consumer (or multiple consumers) doesn't need to pick a local grain randomly; it just calls the one stateless worker (from its point of view) and Orleans will route to whichever activation has less load.

- The presence of the stateless workers allows us to scale locally and automatically, as Orleans will increase their activation count according to the CPU cores available in the silo.

- Multiple activations of LocalCacheGrain talk to their local agent, which facilitates all coordination.

- There is no need for explicit cache invalidation, as cache entries are tuples which are fully managed by OrleanSpaces.

- Contrary to a manager-like / pool-master grain, the agent is a runtime component which is designed to be called concurrently. A master grain would be subject to Orleans' single-threaded execution model, which would hinder throughput in this case.

Below we can see an example implementation of the LocalCacheGrain, which is simply a wrapper around the ISpaceAgent.

public interface ILocalCacheGrain : IGrainWithIntegerKey

{

ValueTask<object?> GetValue(object key);

Task Add(object key, object value);

Task<object?> RemoveEntry(object key);

}

[StatelessWorker]

public class LocalCacheGrain : Grain, ILocalCacheGrain

{

private readonly ISpaceAgent agent;

public LocalCacheGrain(ISpaceAgent agent)

{

this.agent = agent;

}

public ValueTask<object?> GetValue(object key)

{

var tuple = agent.Peek(new SpaceTemplate(key, null));

return ValueTask.FromResult(tuple.IsEmpty ? null : tuple[1]);

}

public async Task Add(object key, object value)

{

await agent.WriteAsync(new SpaceTuple(key, value));

}

public async Task<object?> RemoveEntry(object key)

{

var tuple = await agent.PopAsync(new SpaceTemplate(key, null));

return tuple.IsEmpty ? null : tuple[1];

}

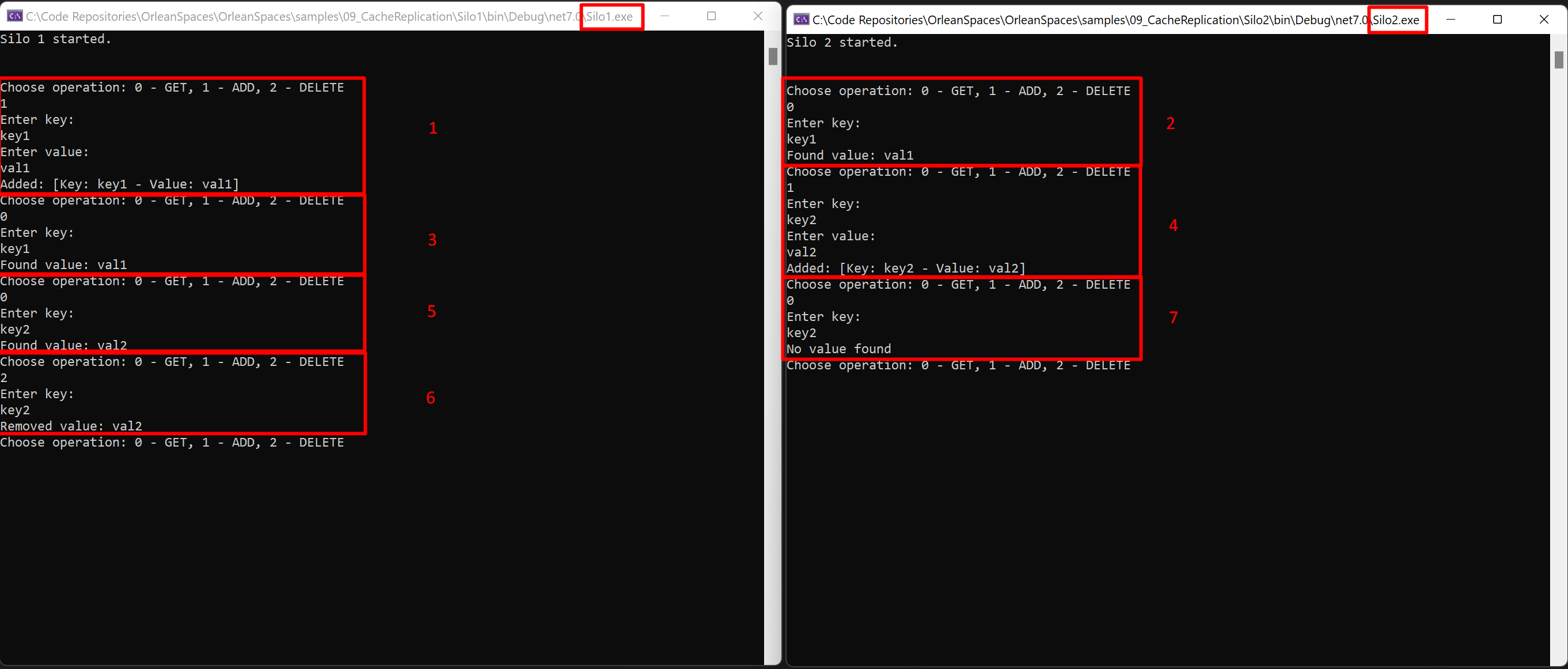

}We ran a cluster of 2 silos, and switched operations between them to simulate cache changes between the 2 silos. The blocks surrounded in red are the actions performed, and the numbers next to them are the order in which they were executed.

1. First we added a new entry (key1, val1) in the cache from Silo 1.

2. Then we read key1 from Silo 2, and we can see it is present.

3. Then we read key1 again, but this time from Silo 1, and we can see it is present.

4. Then we added a new entry (key2, val2) in the cache, this time from Silo 2.

5. Then we read key2 from Silo 1, and we can see it is present.

6. Then we removed key2 from Silo 1.

7. Then we read key2 from Silo 2, but we can see it is not present. It was removed in step 6, by Silo 1.

Additional Information

We saw above how OrleanSpaces handled it all transparently, without us having to do any major coordination. But that is only the tip of the iceberg! The LocalCacheGrain is a simple wrapper around ISpaceAgent and calls into it, albeit all inside the same process and concurrently. If that is a concern to you, OrleanSpaces offers the possibility of creating so-called Space Observers, which can be attached at runtime. These observers receive notifications in parallel and can maintain a copy of the cache inside them.

For example our LocalCacheGrain could have been written the following way:

    [StatelessWorker]
    public class LocalCacheGrain : Grain,
        ILocalCacheGrain, ISpaceObserver<SpaceTuple>
    {
        private Guid subId;
        private readonly SpaceTemplate cacheTemplate = new(null, null);
        private readonly ISpaceAgent agent;
        private readonly Dictionary<object, object> cache = new();

        public LocalCacheGrain(ISpaceAgent agent)
        {
            this.agent = agent;
        }

        public override Task OnActivateAsync(
            CancellationToken cancellationToken)
        {
            subId = agent.Subscribe(this);

            foreach (var tuple in agent.Enumerate())
            {
                // if you use the tuple space only for this
                // cache function, you can omit template matching
                if (cacheTemplate.Matches(tuple))
                {
                    cache.TryAdd(tuple[0], tuple[1]);
                }
            }

            return Task.CompletedTask;
        }

        public override Task OnDeactivateAsync(
            DeactivationReason reason,
            CancellationToken cancellationToken)
        {
            agent.Unsubscribe(subId);
            cache.Clear();
            return Task.CompletedTask;
        }

        public ValueTask<object?> GetValue(object key)
        {
            return ValueTask.FromResult(cache.GetValueOrDefault(key));
        }

        public async Task Add(object key, object value)
        {
            await agent.WriteAsync(new SpaceTuple(key, value));
            cache.TryAdd(key, value);
        }

        public async Task<object?> RemoveEntry(object key)
        {
            var tuple = await agent.PopAsync(new SpaceTemplate(key, null));
            if (tuple.IsEmpty)
            {
                return null;
            }

            cache.Remove(key);
            return tuple[1];
        }

        Task ISpaceObserver<SpaceTuple>.OnExpansionAsync(
            SpaceTuple tuple,
            CancellationToken cancellationToken)
        {
            cache.TryAdd(tuple[0], tuple[1]);
            return Task.CompletedTask;
        }

        Task ISpaceObserver<SpaceTuple>.OnContractionAsync(
            SpaceTuple tuple,
            CancellationToken cancellationToken)
        {
            cache.Remove(tuple[0]);
            return Task.CompletedTask;
        }

        Task ISpaceObserver<SpaceTuple>.OnFlatteningAsync(
            CancellationToken cancellationToken)
        {
            cache.Clear();
            return Task.CompletedTask;
        }
    }

This approach does have the benefit that it serves reads somewhat faster, but local deletions would still need to be propagated to the other silos, so a call to the agent would still be necessary. It also means that the more activations of the LocalCacheGrain you have, the more memory is consumed, as each keeps a copy of the cache internally (multiplied by the number of silos across the cluster).

So overall, I would shy away from this approach. That said, if you wanted to keep an append-only history of the cache (for whatever reason), you could create a separate grain that subscribes to expansion notifications. That I would endorse, but it is out of scope for this article.

If you are wondering where all this information is stored, the answer is: wherever you want it to be! OrleanSpaces is built upon Orleans, and therefore inherits its storage model. As a matter of fact, one can run this whole thing in memory, which might very well be suitable in this scenario.

Above we mentioned that agents are also created in any kind of client process (not just silos), so you can extend cache replication from/to clients too, as long as they are .NET applications.

Another very important thing to mention is that the tuple space has limitations on the types of objects you can store in it. But as long as you can represent your data in string form, you can store any information.
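One common way to get an arbitrary object into string form is JSON. The sketch below uses System.Text.Json from the .NET base library; the `Product` record and the `CacheEntryCodec` helper are hypothetical names, and nothing here is part of the OrleanSpaces API.

```csharp
using System;
using System.Text.Json;

// Hypothetical payload type that we assume cannot be stored in a tuple directly.
public sealed record Product(int Id, string Name);

// Hypothetical helper: converts values to and from a JSON string.
public static class CacheEntryCodec
{
    public static string Encode<T>(T value) => JsonSerializer.Serialize(value);

    public static T? Decode<T>(string json) => JsonSerializer.Deserialize<T>(json);
}
```

The encoded string would then take the place of the value in the grain's Add call, and GetValue callers would decode it on the way out. Whether this is worth the serialization cost depends on how often entries are read.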

In addition, if you can either serialize it to a binary format or encode the information directly via primitives like byte, int, etc., then I would highly suggest injecting the specialized agent type ISpaceAgent<T, TTuple, TTemplate>, which provides substantially lower allocations and much faster lookups, as it heavily leverages SIMD.

Closing Note

The last thing to say is that OrleanSpaces can be used in a multitude of ways; it all depends on the creativity of the user. The example application can be found in the samples folder on the GitHub repository.

If you found this article helpful please give it a share in your favorite forums 😉.